S3 Programmatic access with AWS CLI

Table of contents

- S3:

- ✅Features of Amazon S3:

- Concepts in S3:

- How does Amazon S3 work?

- Tasks:

- ➡️Launch an EC2 instance using the AWS Management Console and connect to it using Secure Shell (SSH).

- ➡️Create an S3 bucket and upload a file to it using the AWS Management Console.

- ➡️Access the file from the EC2 instance using the AWS Command Line Interface (AWS CLI).

In the previous blog, I discussed IAM programmatic access with the AWS CLI. In this blog, I will explain S3 and how to access it with the AWS CLI.

S3:

Amazon S3 (Simple Storage Service) is a scalable object storage service that Amazon Web Services (AWS) provides.

It allows you to store and retrieve large amounts of data in a secure and highly available manner.

S3 is commonly used for various purposes, including backup and restore, data archiving, content distribution, and hosting static websites.

Here's the link to the official documentation of S3.

✅Features of Amazon S3:

Storage classes:

S3 offers different storage classes to optimize cost and performance based on your data access patterns. The available classes include S3 Standard, S3 Intelligent-Tiering, S3 Standard-IA (Infrequent Access), S3 One Zone-IA, and the archival classes S3 Glacier Instant Retrieval, S3 Glacier Flexible Retrieval (formerly S3 Glacier), and S3 Glacier Deep Archive.
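From the CLI, you choose the storage class per object at upload time with the `--storage-class` option of `aws s3 cp`. The following is a minimal sketch that assumes a bucket you own. The function names are hypothetical, and so are the file and bucket names in the usage note.

```shell
# Upload a file into a specific storage class.
# Usage: upload_with_class <local-file> <bucket-name> [storage-class]
# Values accepted by --storage-class include STANDARD, INTELLIGENT_TIERING,
# STANDARD_IA, ONEZONE_IA, GLACIER_IR, GLACIER and DEEP_ARCHIVE.
upload_with_class() {
  local file="$1" bucket="$2" class="${3:-STANDARD}"
  aws s3 cp "$file" "s3://${bucket}/" --storage-class "$class"
}

# Print the storage class S3 recorded for an object. S3 omits the field for
# STANDARD objects, so the CLI prints "None" for them.
show_storage_class() {
  aws s3api head-object --bucket "$1" --key "$2" \
    --query StorageClass --output text
}
```

For example, `upload_with_class report.csv shubh-s3.bucket STANDARD_IA` followed by `show_storage_class shubh-s3.bucket report.csv` should print `STANDARD_IA`.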

Storage management:

Amazon S3 has storage management features that you can use to manage costs, meet regulatory requirements, reduce latency, and save multiple distinct copies of your data for compliance requirements.

Lifecycle management - You can use lifecycle management to automatically move your data to different storage classes as it ages. This can help you save money on storage costs.

Object lock - You can use object lock to prevent your data from being deleted or overwritten for a specified period. This can help you meet regulatory requirements.

Access control - You can control who can access your data, which helps protect it from unauthorized access.

Replication - You can replicate objects to another bucket in the same Region or in a different Region to improve availability, reduce latency, or keep separate copies for compliance.

Data backup - You can use Amazon S3 as a backup target in the cloud, which helps protect against data loss.
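Of the storage management features above, lifecycle management is easy to try from the CLI. `aws s3api put-bucket-lifecycle-configuration` takes the rules as JSON. The sketch below makes a few illustrative assumptions: objects under a `logs/` prefix move to Standard-IA after 30 days and to Glacier Flexible Retrieval (`GLACIER`) after 90 days. The rule ID, prefix, day counts and function name are hypothetical choices, not values from this walkthrough.

```shell
# Write a lifecycle rule to a JSON file and apply it to a bucket.
# Usage: apply_lifecycle <bucket-name> <prefix> [config-file]
apply_lifecycle() {
  local bucket="$1" prefix="$2" config="${3:-lifecycle.json}"
  cat > "$config" <<EOF
{
  "Rules": [
    {
      "ID": "archive-old-objects",
      "Filter": { "Prefix": "${prefix}" },
      "Status": "Enabled",
      "Transitions": [
        { "Days": 30, "StorageClass": "STANDARD_IA" },
        { "Days": 90, "StorageClass": "GLACIER" }
      ]
    }
  ]
}
EOF
  aws s3api put-bucket-lifecycle-configuration --bucket "$bucket" \
    --lifecycle-configuration "file://${config}"
}
```

This call replaces any lifecycle configuration the bucket already has. `aws s3api get-bucket-lifecycle-configuration --bucket shubh-s3.bucket` shows the rules that are in effect.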

Access management and security:

S3 offers various mechanisms to control access to your buckets and objects. IAM policies, bucket policies and Access Control Lists (ACLs) can define granular permissions for different users and applications.

By default, S3 buckets and the objects in them are private.
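You attach a bucket policy, which is a JSON document, with `aws s3api put-bucket-policy`. As one illustration, the sketch below writes a policy that denies any request to the bucket that is not sent over HTTPS, using the `aws:SecureTransport` condition key. The function and file names are hypothetical, and you would adapt the statements to your own access needs.

```shell
# Attach a bucket policy that denies every request not made over HTTPS.
# Usage: require_tls <bucket-name> [policy-file]
require_tls() {
  local bucket="$1" policy_file="${2:-policy.json}"
  cat > "$policy_file" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyInsecureTransport",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::${bucket}",
        "arn:aws:s3:::${bucket}/*"
      ],
      "Condition": { "Bool": { "aws:SecureTransport": "false" } }
    }
  ]
}
EOF
  aws s3api put-bucket-policy --bucket "$bucket" --policy "file://${policy_file}"
}
```

Afterwards, `aws s3api get-bucket-policy --bucket shubh-s3.bucket` prints the attached policy back.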

Data Processing:

To transform data and trigger workflows that automate other processing tasks at scale, you can use features such as S3 Object Lambda and S3 Event Notifications.

Storage logging and monitoring:

Amazon S3 provides logging and monitoring tools, such as server access logs, AWS CloudTrail and Amazon CloudWatch metrics, that you can use to monitor and control how your Amazon S3 resources are being used.

Analytics and insights:

Amazon S3 offers features such as S3 Storage Lens and Storage Class Analysis that give you visibility into your storage usage, so you can better understand, analyze, and optimize your storage at scale.

Strong consistency:

Amazon S3 provides strong read-after-write consistency for PUT and DELETE requests of objects in your Amazon S3 bucket in all AWS Regions.
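A quick way to see read-after-write consistency from the CLI is to write an object and read it back immediately. `aws s3 cp` accepts `-` as the source to read from standard input and as the destination to write to standard output. The following is a minimal sketch. The function name is hypothetical, and the bucket and key are placeholders.

```shell
# Write a small object, then read it straight back.
# Usage: write_then_read <bucket-name> <key> <text>
# With strong read-after-write consistency, the read returns the new text.
write_then_read() {
  local bucket="$1" key="$2" body="$3"
  printf '%s\n' "$body" | aws s3 cp - "s3://${bucket}/${key}"
  aws s3 cp "s3://${bucket}/${key}" -
}
```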

Concepts in S3:

Buckets: S3 organizes data into containers called buckets. Each bucket has a globally unique name and serves as a logical container for objects.

Objects: Objects are the fundamental entities stored in S3. They consist of the data you want to store and associated metadata.

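Keys: A key is the unique identifier of an object within a bucket. S3 has a flat structure, but keys can contain prefixes separated by slashes (for example, docs/2024/notes.txt). The console displays these prefixes as folders, which helps you organize objects within a bucket.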

S3 Versioning: S3 supports versioning, which enables you to store multiple versions of an object. This feature helps in tracking changes and recovering from accidental deletions or modifications.

Version ID: In Amazon S3, when versioning is enabled for a bucket, each object can have multiple versions. Each version of an object is assigned a unique identifier called a Version ID. The Version ID is a string that uniquely identifies a specific version of an object within a bucket.

Bucket policy: A bucket policy is a resource-based policy, written in JSON and attached to a specific S3 bucket, that defines who can access the bucket and its objects and what actions they can perform. It complements IAM (Identity and Access Management) policies, which are attached to users, groups and roles rather than to the bucket.

S3 Access Points: S3 Access Points simplify managing access to shared S3 buckets. Each access point has its own hostname and its own access point policy, which gives an application a dedicated entry point to a bucket or to specific prefixes within it.

Access control lists (ACLs): ACLs are a legacy method of managing access to buckets and objects. AWS recommends bucket policies and IAM policies instead, and ACLs are disabled by default on new buckets. They can still be enabled for specific scenarios that need per-object permissions.

Regions: S3 is available in AWS Regions around the world. When you create a bucket, you choose the Region where it is stored. Its data stays in that Region unless you explicitly copy or replicate it, and choosing a Region close to your users reduces latency.
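Two of the concepts above are easy to explore from the CLI. Keys are plain strings, so the "folders" in an S3 URI are just part of the key. Versioning and version IDs are handled through `aws s3api`. The following is a minimal sketch. The function names are hypothetical, and the bucket, key and version ID values are placeholders.

```shell
# Split an S3 URI such as s3://shubh-s3.bucket/docs/2024/notes.txt.
s3_bucket() {                       # -> shubh-s3.bucket
  local rest="${1#s3://}"           # drop the scheme
  printf '%s\n' "${rest%%/*}"       # everything before the first slash
}
s3_key() {                          # -> docs/2024/notes.txt
  local rest="${1#s3://}"
  case "$rest" in
    */*) printf '%s\n' "${rest#*/}" ;;   # everything after the first slash
    *)   printf '\n' ;;                  # a bare bucket URI has no key
  esac
}

# Turn on versioning for a bucket.
enable_versioning() {
  aws s3api put-bucket-versioning --bucket "$1" \
    --versioning-configuration Status=Enabled
}
# List versions of keys starting with a prefix, one "VersionId IsLatest" row each.
list_versions() {
  aws s3api list-object-versions --bucket "$1" --prefix "$2" \
    --query 'Versions[].[VersionId,IsLatest]' --output text
}
# Download one specific version of an object to a local file.
get_version() {
  aws s3api get-object --bucket "$1" --key "$2" --version-id "$3" "$4"
}
```

Similarly, `aws s3 ls s3://shubh-s3.bucket/docs/` lists the keys under the `docs/` prefix as if it were a folder.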

How does Amazon S3 work?

You create a bucket. A bucket is a top-level container, somewhat like a folder, that holds your data.

You upload your data to the bucket as objects. A single object can be up to 5 TB, and you can also upload folders and subfolders.

You can access your data from anywhere with network access and the right permissions, using the AWS Management Console, the AWS Command Line Interface (CLI), the AWS SDKs, or any other application that supports Amazon S3.
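The upload step above also works for whole folders from the CLI. `aws s3 cp` with `--recursive` copies every file under a local directory, and the relative paths become key prefixes. The following is a minimal sketch. The function name and paths are hypothetical.

```shell
# Upload a local folder, keeping its name as the key prefix.
# Usage: upload_folder <local-dir> <bucket-name>
upload_folder() {
  local dir="${1%/}" bucket="$2"          # strip any trailing slash
  aws s3 cp "$dir" "s3://${bucket}/$(basename "$dir")/" --recursive
}
```

For example, `upload_folder ./photos shubh-s3.bucket` stores `./photos/2024/a.jpg` under the key `photos/2024/a.jpg`.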

Tasks:

✅Task 1:

➡️Launch an EC2 instance using the AWS Management Console and connect to it using Secure Shell (SSH).

➡️Create an S3 bucket and upload a file to it using the AWS Management Console.

➡️Access the file from the EC2 instance using the AWS Command Line Interface (AWS CLI).

Let's start working on these tasks.

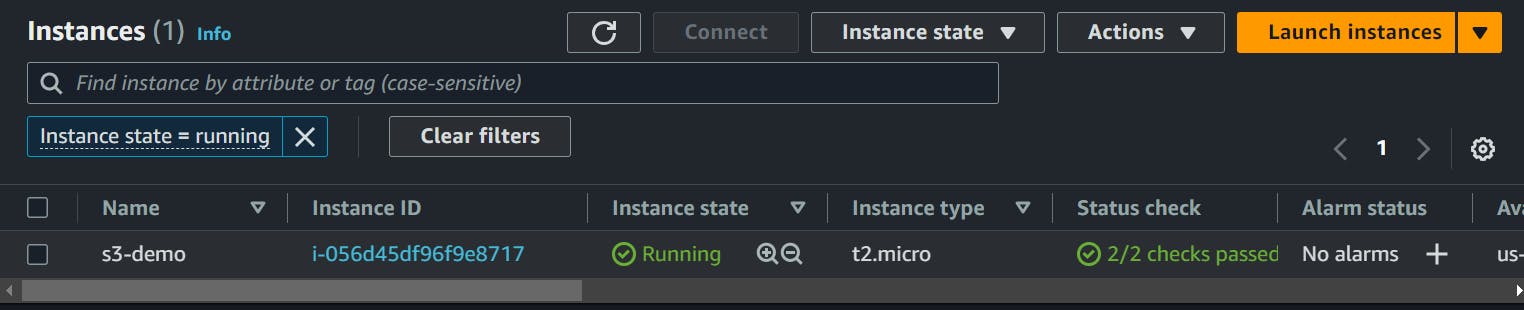

Launch an EC2 instance using the AWS Management Console and connect to it using Secure Shell (SSH).

Keep the configuration within the Free Tier (for example, a Free Tier eligible instance type such as t2.micro) to avoid billing charges.

Connect to it using SSH:

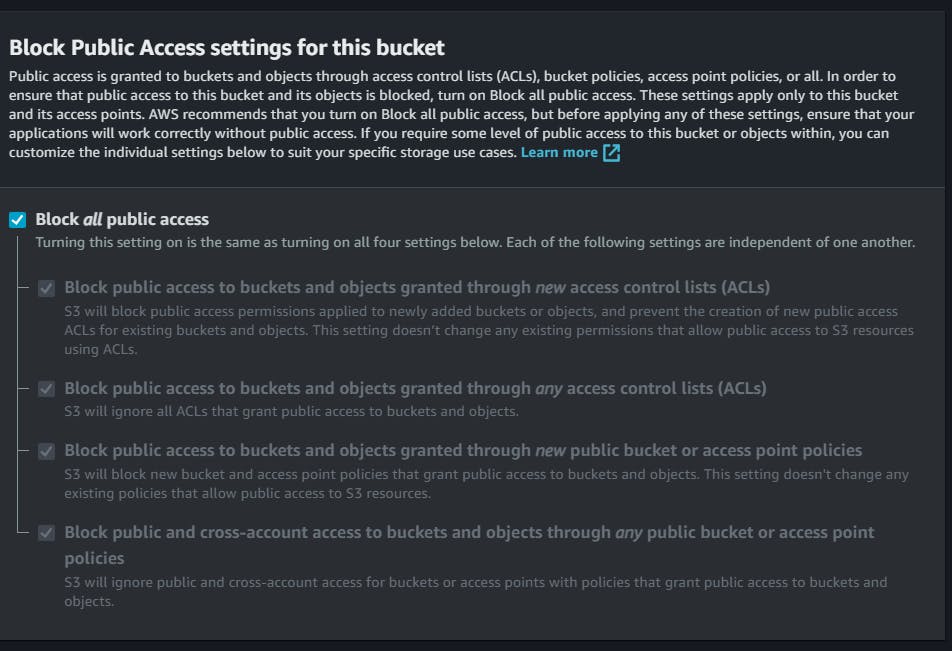

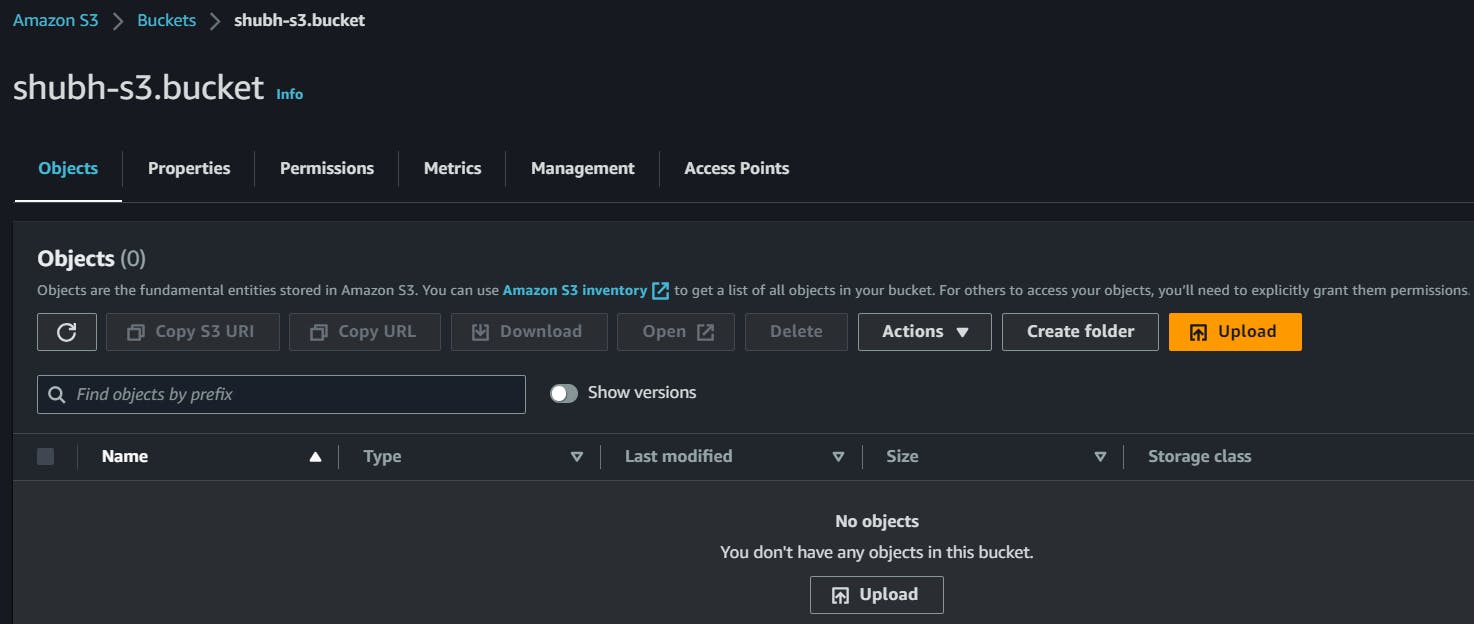
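For reference, connecting from a Linux or macOS terminal usually looks like the sketch below. The key file, host name and login user are assumptions. Use the .pem file you downloaded when you created the key pair, the instance's public DNS name or IP address from the console, and the default user of your AMI (`ubuntu` on Ubuntu, `ec2-user` on Amazon Linux). The function name is hypothetical.

```shell
# Connect to an EC2 instance over SSH.
# Usage: connect_ec2 <key.pem> <public-dns-or-ip> [user]
connect_ec2() {
  local key_file="$1" host="$2" user="${3:-ubuntu}"
  chmod 400 "$key_file"        # ssh rejects private keys readable by others
  ssh -i "$key_file" "${user}@${host}"
}
```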

Create an S3 bucket and upload a file to it using the AWS Management Console.

Search for S3 in the AWS Management Console.

Click on Create Bucket.

Bucket name: shubh-s3.bucket

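You can give the bucket any name that is not already taken, because bucket names are globally unique. Names must be 3 to 63 characters long, use only lowercase letters, numbers, dots (.) and hyphens (-), and begin and end with a letter or number. Characters such as & are not allowed.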

Leave the other options at their defaults and click Create bucket.

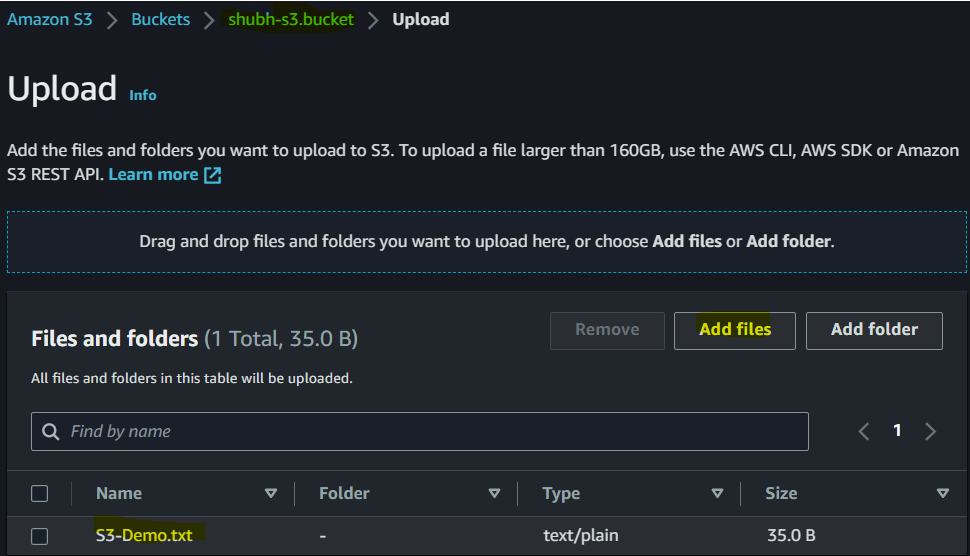
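Bucket naming rules are easy to get wrong, so here is a small checker for the core rules that AWS documents for general purpose buckets. A name must be 3 to 63 characters long and contain only lowercase letters, digits, dots and hyphens. It must start and end with a letter or digit, contain no two adjacent dots, and not be formatted like an IP address. The checker does not cover every rule (AWS also reserves some prefixes and suffixes, such as the `xn--` prefix), and it cannot tell you whether a name is already taken; only S3 can. The function name is hypothetical.

```shell
# Return 0 if a name passes the core S3 bucket naming rules, 1 otherwise.
# Usage: valid_bucket_name <name>
valid_bucket_name() {
  local name="$1"
  # 3 to 63 characters
  [ "${#name}" -ge 3 ] && [ "${#name}" -le 63 ] || return 1
  # Only lowercase letters, digits, dots and hyphens (listed explicitly,
  # so the check does not depend on the locale's collation order).
  case $name in
    *[!abcdefghijklmnopqrstuvwxyz0123456789.-]*) return 1 ;;
  esac
  case $name in
    [.-]*|*[.-]) return 1 ;;   # must start and end with a letter or digit
    *..*) return 1 ;;          # no two adjacent dots
  esac
  # Must not be formatted like an IPv4 address, e.g. 192.168.5.4
  if printf '%s\n' "$name" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$'; then
    return 1
  fi
  return 0
}
```

For example, `valid_bucket_name shubh-s3.bucket && echo ok` prints `ok`, while a name containing `&` or uppercase letters is rejected.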

Let us upload a file to the bucket we created just now.

Open the bucket you just created, and in the Objects tab click Upload.

Click Add files and select the file you want to upload from your local machine.

Then click Upload.

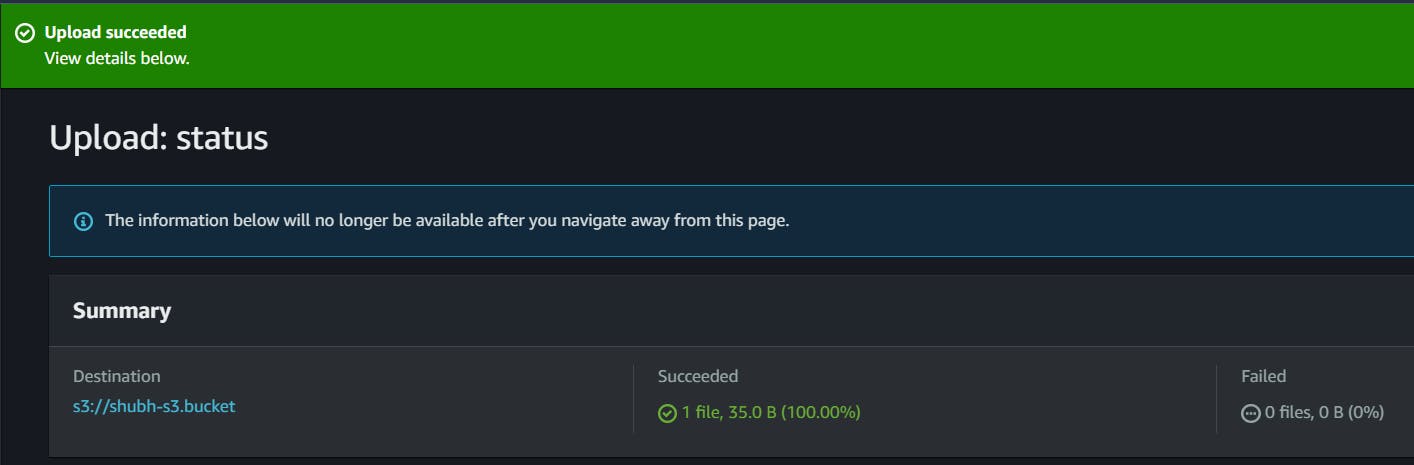

You can see that the file upload was successful.

Access the file from the EC2 instance using the AWS Command Line Interface (AWS CLI).

Here's the link to install and configure the AWS CLI.
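On a 64-bit x86 Linux instance, installing AWS CLI version 2 follows the steps in AWS's installation guide, roughly as sketched below. The sketch assumes `curl`, `unzip` and `sudo` are available. On Ubuntu you may need to run `sudo apt install unzip` first. ARM (Graviton) instances use the `awscli-exe-linux-aarch64.zip` bundle instead. The function name is hypothetical.

```shell
# Install AWS CLI v2 from the official bundle (x86_64 Linux).
install_aws_cli() {
  curl -sS "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o awscliv2.zip || return 1
  unzip -q awscliv2.zip || return 1   # extracts into ./aws
  sudo ./aws/install || return 1      # installs to /usr/local/aws-cli, links /usr/local/bin/aws
  aws --version                       # confirm the CLI is on PATH
}
```

After installing, `aws configure` prompts for the access key ID, the secret access key, the default Region and the output format. `aws sts get-caller-identity` then confirms which identity the CLI is using.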

To list the S3 buckets in your account from the CLI:

aws s3 ls

Let's create a file on the instance and upload it to the S3 bucket using the CLI.

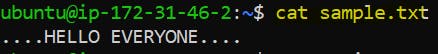

echo "...HELLO EVERYONE..." > sample.txt

ls

cat sample.txt

aws s3 cp sample.txt s3://BUCKET-NAME

You can verify the upload in the AWS Management Console, where sample.txt now appears in the bucket.
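You can also check the upload without leaving the terminal. `aws s3api head-object` returns an object's metadata. It exits with a non-zero status if the key does not exist or you lack permission to read it, which makes it handy in scripts. The following is a minimal sketch. The function names are hypothetical.

```shell
# Succeed if the object exists (and is readable), fail otherwise.
# Usage: object_exists <bucket-name> <key>
object_exists() {
  aws s3api head-object --bucket "$1" --key "$2" > /dev/null 2>&1
}
# Print the object's size in bytes.
object_size() {
  aws s3api head-object --bucket "$1" --key "$2" \
    --query ContentLength --output text
}
```

For example, `object_exists shubh-s3.bucket sample.txt && echo "sample.txt is in the bucket"`.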

Now let's download a file from the S3 bucket to the instance using the CLI:

#aws s3 cp s3://bucket-name/file.txt .

aws s3 cp s3://shubh-s3.bucket/S3-Demo.txt .

ls

Let's sync the contents of the current folder to our bucket.

touch file{1..5}.txt

ls

#aws s3 sync <local-folder> s3://bucket-name

aws s3 sync . s3://shubh-s3.bucket

This command uploads every new or changed file in the current directory (.) to the bucket. Files that are already in the bucket and unchanged are skipped.

This sync can be verified in the AWS management console.

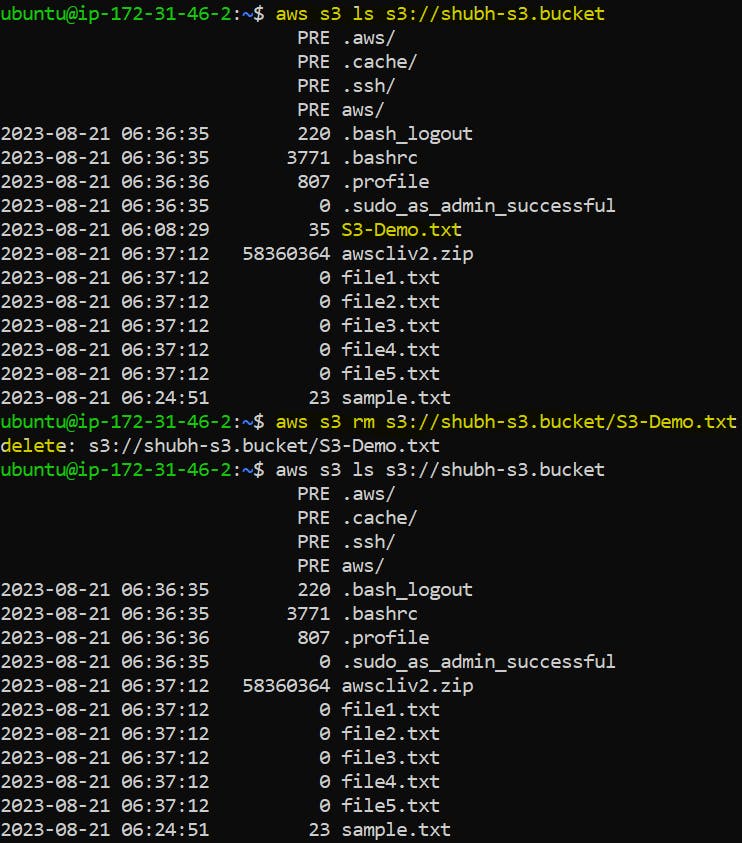
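`aws s3 sync <source> <destination>` copies new and changed files from the source to the destination, so the argument order decides the direction. In the example above, `.` means every file under the current directory, hidden files included. It is therefore safer to sync a dedicated folder and preview the result with `--dryrun` first. The following is a minimal sketch. The function and folder names are hypothetical.

```shell
# Preview which files a local-to-bucket sync would upload, changing nothing.
preview_push() {            # <local-dir> <s3-uri>
  aws s3 sync "$1" "$2" --dryrun
}
# Upload new and changed files from a local folder to a bucket prefix.
push_folder() {             # <local-dir> <s3-uri>
  aws s3 sync "$1" "$2"
}
# Download new and changed objects from a bucket prefix to a local folder.
pull_folder() {             # <s3-uri> <local-dir>
  aws s3 sync "$1" "$2"
}
```

By default, sync never deletes anything. Adding `--delete` also removes files at the destination that no longer exist at the source, so preview that combination before running it.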

To list the objects in an S3 bucket:

aws s3 ls s3://bucket-name

To delete an object from an S3 bucket:

#aws s3 rm s3://bucket-name/file.txt

aws s3 rm s3://shubh-s3.bucket/S3-Demo.txt

You can observe that the file S3-Demo.txt, which was present earlier, has been deleted successfully.

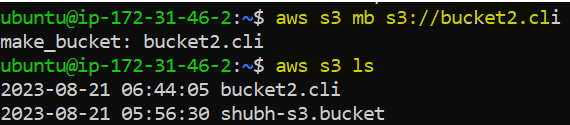

To create a new bucket, use mb (make bucket). Listing the buckets afterwards shows the new bucket.

#aws s3 mb s3://bucket-name

aws s3 mb s3://bucket2.cli

aws s3 ls

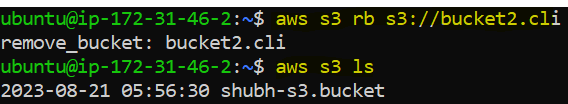
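`aws s3 mb` creates the bucket in the CLI's default Region (set with `aws configure`) unless you pass `--region`. The following is a minimal sketch for creating a bucket in a chosen Region and checking where an existing bucket lives. The function names are hypothetical, and `ap-south-1` in the usage note is only an example.

```shell
# Create a bucket in a specific Region.
make_bucket_in() {          # <bucket-name> <region>
  aws s3 mb "s3://$1" --region "$2"
}
# Print the Region of a bucket. S3 reports buckets in us-east-1 with a null
# LocationConstraint, which the CLI prints as "None".
bucket_region() {           # <bucket-name>
  aws s3api get-bucket-location --bucket "$1" \
    --query LocationConstraint --output text
}
```

For example, after `make_bucket_in bucket2.cli ap-south-1`, running `bucket_region bucket2.cli` should print `ap-south-1`.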

To delete a bucket, use rb (remove bucket). The bucket must be empty; for a bucket without versioning, adding --force deletes its objects first. The deletion is permanent.

#aws s3 rb s3://bucket-name

aws s3 rb s3://bucket2.cli

In this way, task 1 is completed successfully.

✅Task 2:

➡️Create a snapshot of the EC2 instance and use it to launch a new EC2 instance.

➡️Download a file from the S3 bucket using the AWS CLI.

➡️Verify that the contents of the file are the same on both EC2 instances.

Create a snapshot of the EC2 instance and use it to launch a new EC2 instance.

In Amazon EC2, you can create a snapshot of an EBS (Elastic Block Store) volume to create a point-in-time copy of the data stored on the volume. This snapshot can be used to back up data, migrate volumes between regions, or create new volumes from the snapshot.

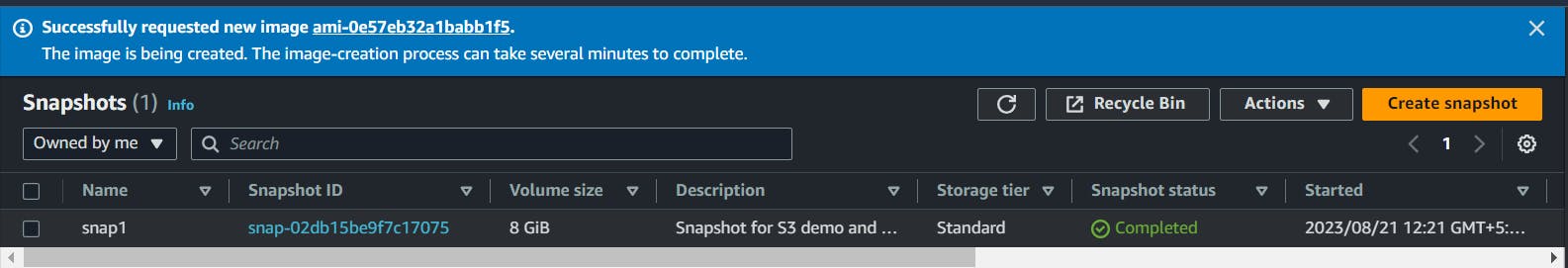

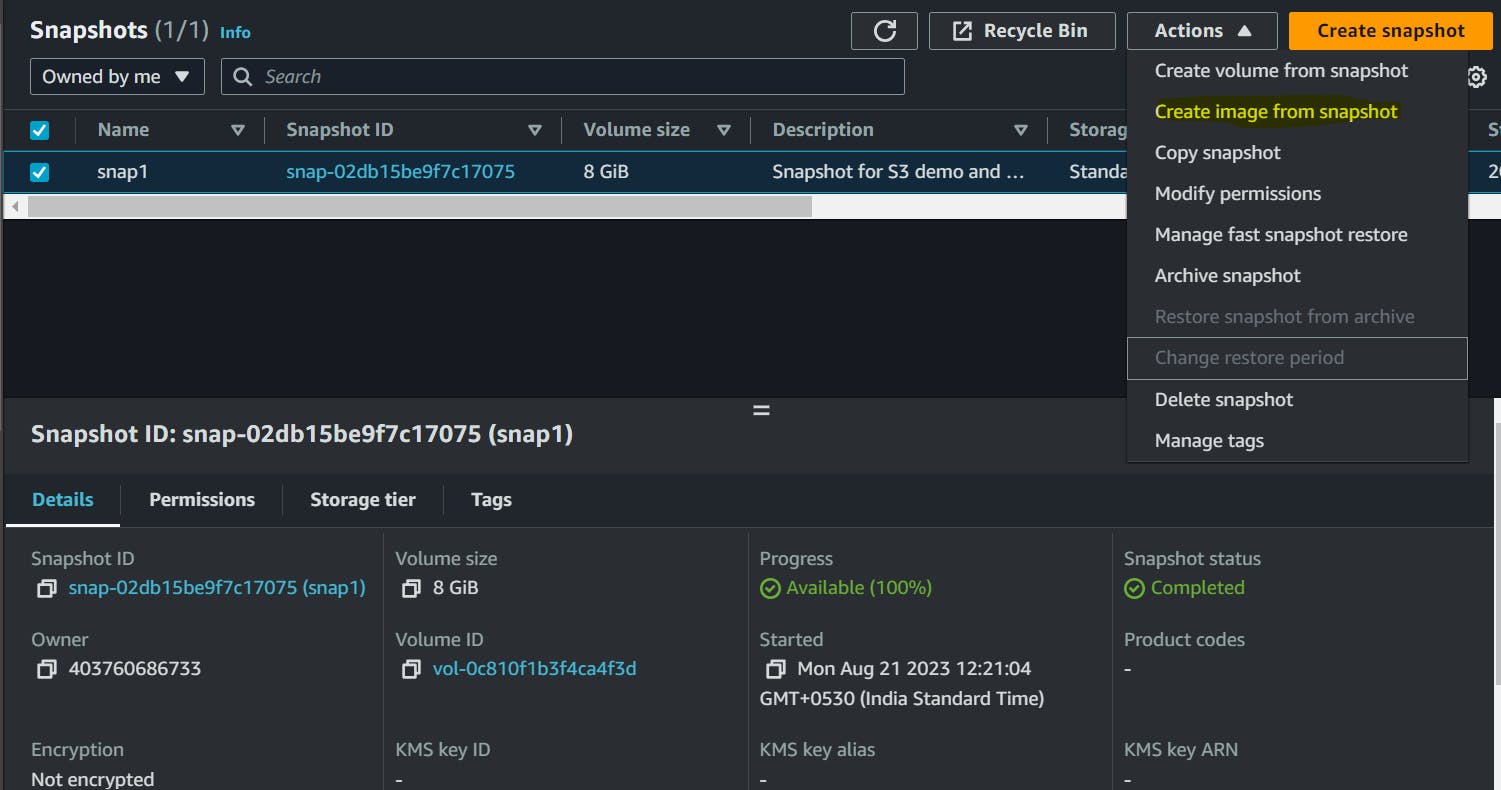

Search EC2 in the console > Scroll down to EBS (Elastic Block Store) > Select Snapshots.

Click on Create snapshot > Select Instance as the resource type > Select the instance ID > Click on Create snapshot.

We can see the snapshot is created successfully as snap1.

Let us use this Snapshot to launch a new EC2 instance.

Select the Snapshot > Click on Actions > Select Create image from the snapshot.

Image Name: S3Practice

Description: Image from Snapshot

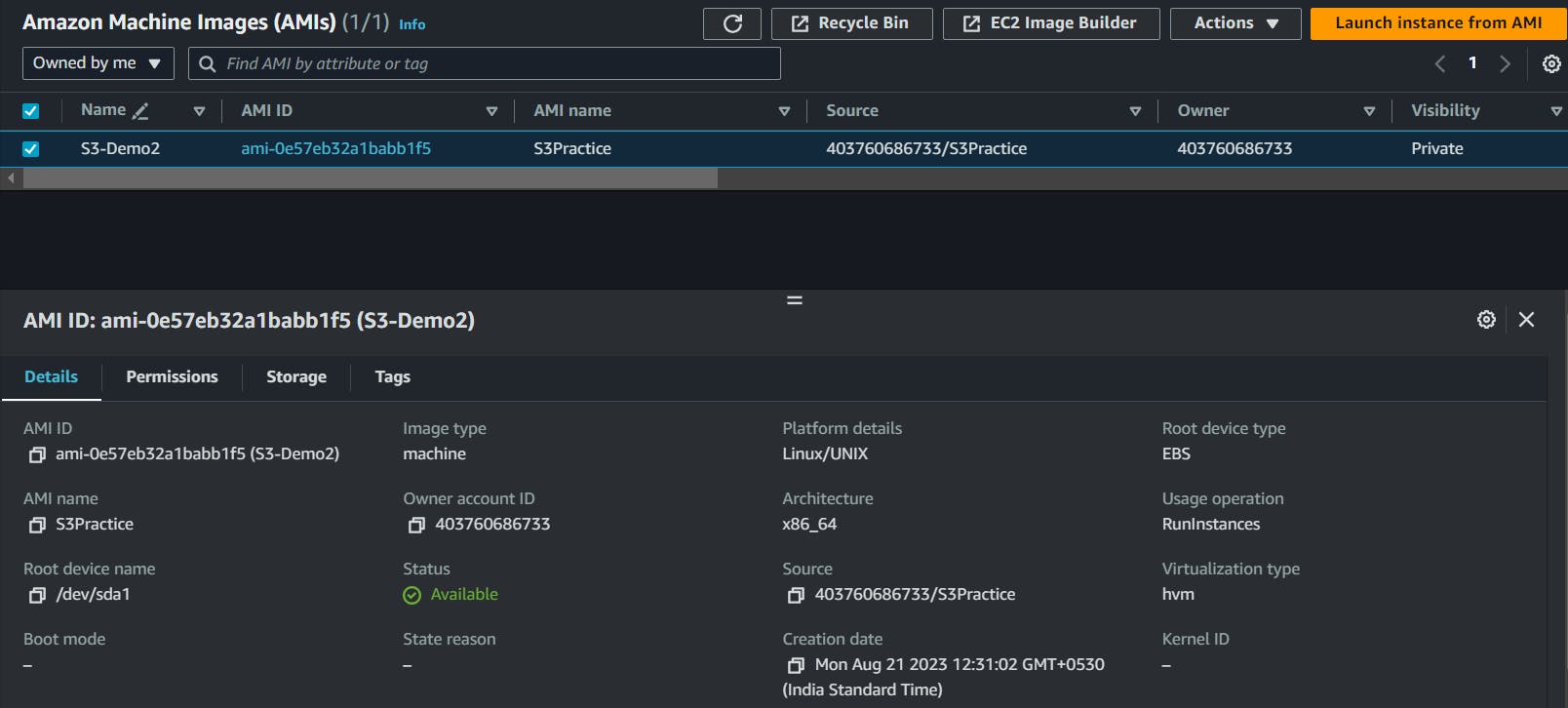

Click on Create image. In the AMIs section, you can see that the AMI has been created.

Select the AMI > Click on Launch instance from AMI.

Create an instance named s3-demo2 and launch it with the other required details. Connect to the instance using SSH.
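You can also script the snapshot, image and launch steps with the EC2 commands of the AWS CLI. The following is a minimal sketch with several assumptions. It snapshots a single root volume rather than selecting the whole instance. The IDs and key pair name are placeholders for your own values. The root device name must match the original instance (`/dev/sda1` on Ubuntu AMIs, `/dev/xvda` on Amazon Linux), and the architecture is assumed to be x86_64. Instance types such as t3.micro may also need `--ena-support` on the image. The function names are hypothetical.

```shell
# 1. Snapshot the root volume and wait until the snapshot is usable.
snapshot_volume() {         # <volume-id> <description>
  local snap_id
  snap_id=$(aws ec2 create-snapshot --volume-id "$1" --description "$2" \
    --query SnapshotId --output text) || return 1
  aws ec2 wait snapshot-completed --snapshot-ids "$snap_id"
  printf '%s\n' "$snap_id"
}
# 2. Register an AMI whose root volume is created from that snapshot.
image_from_snapshot() {     # <snapshot-id> <image-name> [root-device]
  local root_device="${3:-/dev/sda1}"
  aws ec2 register-image --name "$2" --description "Image from Snapshot" \
    --architecture x86_64 --virtualization-type hvm \
    --root-device-name "$root_device" \
    --block-device-mappings "DeviceName=${root_device},Ebs={SnapshotId=$1}" \
    --query ImageId --output text
}
# 3. Launch a Free Tier sized instance from the AMI, named s3-demo2.
launch_from_image() {       # <ami-id> <key-pair-name>
  aws ec2 run-instances --image-id "$1" --instance-type t2.micro \
    --key-name "$2" \
    --tag-specifications 'ResourceType=instance,Tags=[{Key=Name,Value=s3-demo2}]' \
    --query 'Instances[0].InstanceId' --output text
}
```

Each function prints the ID it creates, so you can chain them, for example `image_from_snapshot "$(snapshot_volume vol-0123 snap1)" S3Practice`.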

Download a file from the S3 bucket using the AWS CLI.
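The download itself is a single `aws s3 cp` from the bucket. Because s3-demo2 was built from a snapshot of the first instance, it most likely already has the AWS CLI, its `~/.aws` configuration and even the old sample.txt on disk. The sketch below therefore downloads into a separate folder, so that the copy you inspect really came from S3. The function name and the `from-s3` folder are hypothetical. The bucket and key are the ones used earlier.

```shell
# Download one object into a separate folder and print its contents.
# Usage: fetch_from_bucket <bucket-name> <key> [dest-dir]
fetch_from_bucket() {
  local bucket="$1" key="$2" dest="${3:-from-s3}"
  mkdir -p "$dest"
  aws s3 cp "s3://${bucket}/${key}" "${dest}/${key##*/}"
  cat "${dest}/${key##*/}"
}
```

For example, `fetch_from_bucket shubh-s3.bucket sample.txt` saves the file as `from-s3/sample.txt` and prints it.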

Verify that the contents of the file are the same on both EC2 instances.

Comparing the contents of sample.txt on the original instance (s3-demo) with the copy on the new instance (s3-demo2) shows that they match exactly.

Hence, we can verify that the files are the same on both instances.
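Comparing the text by eye works for a one-line file, but for anything larger, comparing checksums is more reliable. Run the sketch below on each instance to print a SHA-256 digest of sample.txt. The two digests match only if the contents are identical. The sketch assumes GNU coreutils' `sha256sum`, which is present on common Linux AMIs. The function names are hypothetical.

```shell
# Print just the SHA-256 digest of a file.
file_digest() {
  sha256sum "$1" | cut -d ' ' -f 1
}
# Succeed when two local files have identical contents.
same_contents() {
  [ "$(file_digest "$1")" = "$(file_digest "$2")" ]
}
# Digest of the object as stored in S3, streamed without saving a file.
s3_digest() {               # <bucket-name> <key>
  aws s3 cp "s3://$1/$2" - | sha256sum | cut -d ' ' -f 1
}
```

For example, `file_digest sample.txt` on each instance, or `s3_digest shubh-s3.bucket sample.txt`, should print the same 64-character value.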

And that's how we can access and modify S3 and its contents through the CLI.

In this blog, I have discussed S3 programmatic access with the AWS CLI. If you have any questions or want to share your experiences, please comment below. Don't forget to read my other blogs, and connect with me on LinkedIn so we can have a conversation.

In the next blog post, we will explore more advanced topics in the realm of DevOps. So, stay tuned, and let me know if there are any corrections.

Please feel free to connect.

GitHub Repo: Task Day43

Thank you for reading!